Reimagining Software Part 3: Why We Should Embrace Agents

The final part of a 3-part essay digging into how I imagine software (e.g. the application layer) evolving with LLMs.

The Rise of Agents

In the last ~6 months, we’ve seen glimpses of the “agentic” future in everyday life. The term “agents” has gotten a lot of hype in the AI world, referring to how our software will do more for us. Agents are touted as capable of independent action, decision-making, and goal pursuit. 2025 is considered “the year of agents”.

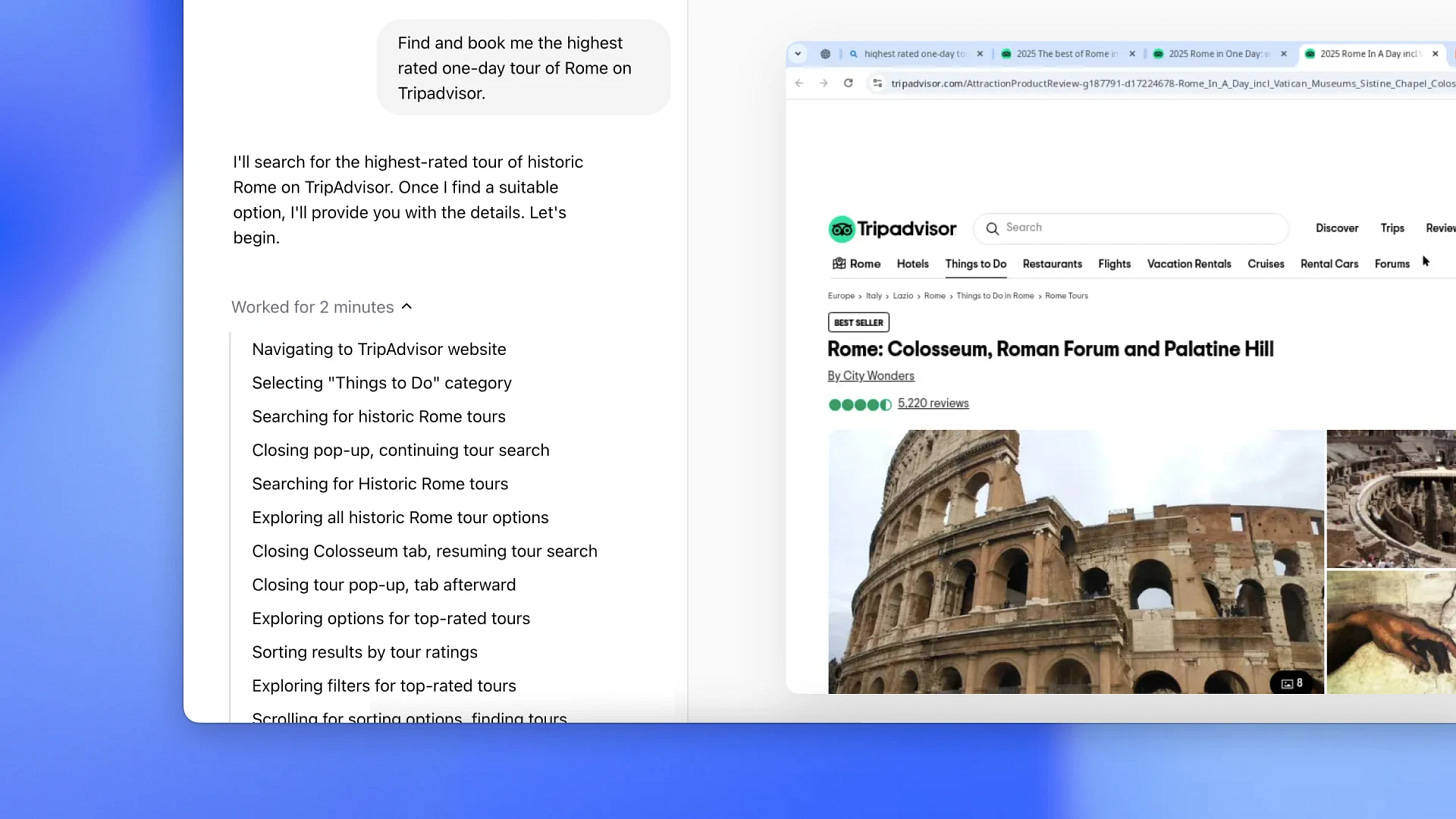

Last month, OpenAI introduced its first mass-market agent called Operator:

Operator can be asked to handle a wide variety of repetitive browser tasks such as filling out forms, ordering groceries, and even creating memes.

Given OpenAI’s distribution power, it became many people’s first real taste of the agentic future of software. The overall reaction has felt mixed. I played around with it and tweeted my initial impression.

I’m excited because I’m seeing the potential for this new paradigm. I first tried Claude’s computer use when Anthropic announced it in Oct 2024. It felt like a cool demo but way too clunky for real use. Then in December, I tested Google’s Stream Realtime with Gemini 2.0 Flash. It was magical. You could talk to Gemini over voice, video, or by sharing your screen and get near-instant feedback. I shared my screen in real-time and asked Gemini to help me make sense of a complex chart I was having trouble following. I felt the co-presence of agents for the first time: like someone else was in the room with me.

With these experiences, I’m forming a rough mental model for agents:

• Asynchronous agents: think of them as autonomous task completers that work in the background to execute tasks for you (e.g. Operator auto-filling forms, AI email assistants, Cursor auto-completing code). Their capabilities are tied to reasoning models, so they will get better at planning and multi-step execution as those models improve.

• Synchronous agents: think of them as co-present helpers. They help you with real-time tasks, and you interact with them through low-latency channels such as voice, video, or screen sharing. At first they will be digital companions on existing surfaces, but eventually they could take on new devices and new physical form factors.

In this article, I’ll explore 2 questions about the future of software with agents:

• Can agents live up to the hype?

• Should humans let agents use software for us?

Agents: Revolution or Just Hype?

One of the biggest criticisms of agents is that they simply don’t work. Operator struggled with simple tasks like automating spreadsheet entry, and Gemini limits how long you can stream to it. The most common critiques are:

• Agents fail at complex, multi-step tasks

• Agents lack memory and continuity

• Latency and cost make real-time assistance impractical

• Contextual understanding is weak

This criticism is fair. We’re seeing some great demos, but there are plenty of rough edges. I’m betting on the trajectory, and that we’ll address these concerns. Here’s why:

Stronger Reasoning Models

Until now, most progress in LLMs has come from scaling pre-training—feeding models more data and compute to improve next-token prediction. But reasoning is still in its early days, and only now are we seeing real advances in inference-time capabilities like planning, self-correction, and multi-step problem-solving. We’re seeing this with OpenAI’s o3 and DeepSeek’s R1. During the training of DeepSeek R1, researchers saw self-correction when the model was solving complex problems. The model interrupted its own reasoning process to pause, reevaluate, and optimize its approach — all autonomously!

A notable example of this occurred when the model, while solving a mathematical equation, interrupted itself, stating:

“Wait, wait. That’s an aha moment I can flag here.”

The model literally explains its reasoning and recognizes its own mistake: an aha moment in real time! As this kind of self-correction improves, I expect agents to become more adaptive and better at handling unexpected changes in workflows.

Better Memory & Long-Term Context

Much of the AI we use today is stateless, so each interaction feels like a fresh conversation with no recollection of the past. This is one of my major complaints with ChatGPT: even with explicit instructions, it will forget key details and hallucinate to pretend it remembers. Memory is one of the most important areas of model research (see “Titans: Learning to Memorize at Test Time”, arXiv:2501.00663). Solving it is key to unlocking AI’s potential, and we can expect improvements to come. Just yesterday, Google Gemini released an update that lets users ask Gemini to consider past chats to craft its responses.

We need agents that can understand our habits and preferences, and tailor their actions accordingly. Agents that can learn from their past mistakes will also perform better in the future.
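To make the stateless-versus-memory distinction concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: `build_prompt`, `MemoryAgent`, and the restaurant preference are hypothetical names invented for this example, and no real model or product API is called. The only thing it shows is how remembered facts could be prepended to what a model sees on each turn.

```python
from dataclasses import dataclass, field


def build_prompt(user_message, remembered_facts=()):
    """Assemble the text a model would see for a single turn (hypothetical format)."""
    lines = [f"Known about the user: {fact}" for fact in remembered_facts]
    lines.append(f"User: {user_message}")
    return "\n".join(lines)


@dataclass
class MemoryAgent:
    """Hypothetical agent wrapper that keeps facts across conversations."""
    facts: list = field(default_factory=list)

    def remember(self, fact):
        # Store each fact once so the prompt does not grow with duplicates.
        if fact not in self.facts:
            self.facts.append(fact)

    def prompt_for(self, user_message):
        # Every turn is built with everything remembered so far.
        return build_prompt(user_message, self.facts)


# Stateless: the model sees only the current message.
stateless_prompt = build_prompt("Book my usual table for Friday.")

# With memory: the same message arrives with the stored preference attached.
agent = MemoryAgent()
agent.remember("prefers the corner table at Luigi's")
memory_prompt = agent.prompt_for("Book my usual table for Friday.")
```

In the stateless case "my usual table" is unresolvable, while the memory-backed prompt carries the preference along. Real systems would also need to decide what to store, when to forget, and how to retrieve only the relevant facts.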

Lower Latency & Cheaper Compute

Running agents in real time is expensive today. But we’re seeing major advances in inference efficiency (much of which DeepSeek shed light on): model distillation (smaller, faster versions of the best models), optimized AI chips (new GPUs and TPUs for lower-cost inference), and more efficient architectures (such as Mixture of Experts, which activates only a fraction of a model’s parameters per token to reduce compute costs). It’s hard to predict when these efficiency gains will plateau, but the agents we’re using today aren’t maxed out.
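For a rough intuition of why Mixture of Experts cuts compute, here is a toy sketch of top-k routing in plain Python. It is not how any particular model implements it: the "experts" are trivial stand-in functions, and the gate scores are hard-coded assumptions rather than the output of a learned router. The point is only that each input runs through k experts instead of all of them.

```python
import math


def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    # Hypothetical expert: a trivial function standing in for a large feed-forward block.
    return lambda x: scale * x


def moe_forward(x, gate_scores, experts, k=2):
    """Run x through only the k highest-scoring experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Eight experts, but only two are evaluated for this input.
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0)]
gate_scores = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 0.2]  # assumed router output
output, used = moe_forward(10.0, gate_scores, experts, k=2)
active_fraction = len(used) / len(experts)
```

With these made-up numbers, only a quarter of the experts do any work for the input, which is where the savings come from: total parameter count can grow while per-token compute stays roughly tied to k.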

We should expect sync agents to feel more like real-time AI companions with minimal delays. This should enable fluid interaction, almost like talking to a human.

Stronger Context Awareness

A big problem is that today’s agents operate in a vacuum: they’re limited to the context of what we explicitly tell them or what they can see on screen. But I expect the future of agents to be multimodal:

• Vision (e.g. understanding screenshots, interpreting complex UI).

• Audio (e.g. real-time conversations, voice-controlled assistants).

• Code execution (e.g. actually running scripts rather than just suggesting them).

To me, it’s inevitable that agentic AI needs more control over the stack. I’m excited to see products like Dia by the Browser Company emerging in this space.

AI won’t exist as an app. Or a button. We believe it’ll be an entirely new environment — built on top of a web browser.

Or maybe even bigger, bolder bets like /dev/agents that promise a greater reimagination from the OS level.

The skeptics are right that today’s agents are far from perfect, but the trajectory is clear.

Should We Let Agents Use Software for Us?

If you accept that agentic technology is only going to get better, then there’s an uncomfortable question that follows. If agents can browse and use software for us, should we let them? What are we going to do instead?

I shared my view in a recent Figma blog post about the rise of agentic AI:

We weren’t born to browse the web. We were born to be curious, create, and connect. Agents are simply the next leap—another level of abstraction that lets us focus on what matters

Humans are tool-builders. Over our history, we’ve invented new tools to harness more capabilities. There’s an arc of abstraction across all of our progress as a species. We invent systems and processes to create leverage. It’s how one person can do more than their physical constraints would otherwise allow. Computing in particular took this to another level, as we could create experiences at scale. The Internet democratized knowledge and opened opportunities to create value across the world. It’s one of our best inventions ever.

Now we’re on the cusp of another leap. Agents will soon browse the internet and use our computers for us, and we should let them. As amazing as our technological innovation has been, there is still friction in using computers and phones. I’d let an agent like Operator handle my more painful tasks.

Is that a good thing? What should I do with my newfound time? To answer that, I’m reminded of Ben Thompson’s Tech’s Two Philosophies:

• Computers doing things for people: this philosophy asserts we should leverage technology to perform tasks on behalf of users, delivering convenience and efficiency.

• Computers helping people do things: this philosophy focuses on empowering users to do more themselves. Technology provides the platform and tools to enhance human creativity and productivity. More people can create and innovate.

I believe we need both.

The Age of Agents: A New Creative Renaissance?

What if agents don’t just free up our time by doing things for us—but change how we think about creativity itself?

I don’t see agents as just a tool for convenience—I see them as a catalyst for change. By abstracting away routine interactions with software, they don’t just make life easier; they create space. And what we choose to do with that space will define the future of work, creativity, and even identity.

For years, we’ve drawn a hard line between creating and consuming. We think of creativity as making something from scratch—writing a novel, designing an app, composing music. But in reality, everything we do online is a form of expression. Curating, sharing, remixing, reacting—these are all acts of participation, even if they don’t feel like traditional creation. The idea that consuming is passive and creating is active is a false divide.

Agents might be what finally breaks it.

They lower the friction to acting. Instead of staring at a blank page, you have a starting point. Instead of needing to master a tool, you can describe what you want and refine it. Instead of passively scrolling, you can remix, contribute, and shape what you take in.

Some will use agents to optimize their lives, delegating every possible action to free up time. But the real opportunity lies in using agents to amplify our potential—to push the boundaries of what’s possible, to dream bigger. And, crucially, to rethink what it means to create.

Creation has always been about more than just making things from scratch. It’s about participating, shaping, and building on what already exists. In the same way computers empowered an entire generation to create new industries, agentic AI will shape the next era of software—not just by automating tasks, but by expanding our ability to build, express, and explore.

The skeptics may be right that today’s agents aren’t there yet. But history tells us that when the tools get better, the world changes.

And when the tools start working for us, perhaps we finally get to focus on what we were meant to do all along: create.