Reimagining Software Part 2: Why Software Should Feel More Like Playing Video Games

A three-part essay digging into how I imagine software (i.e., the application layer) evolving with LLMs.

In part 1 of this series, I explored how AI can make our software feel more personalized to us. In part 2, I’ll examine how software can feel more dynamic & fluid. We’ll briefly revisit the history of software design and its constraints, and I’ll make the case for why we need fluidity.

How We Navigate Software

The first design-heavy project I led as a PM was a large homepage redesign. My partner-in-crime was my designer and now close friend, Chris Wang. He schooled me on the world of interaction design, human-computer interaction (HCI), and user experience. It was the first time I learned about the principles of wayfinding.

Wayfinding in software refers to how users navigate digital experiences: navigation menus, breadcrumb trails, icons, colors, and other design elements that help them understand where they are. But Chris also showed me how wayfinding is rooted in real-world design. In physical spaces, we orient ourselves using maps, signage, landmarks, and other navigational aids. Together, we studied examples ranging from airport signage to the grilles of automobiles. I learned how familiarity, cognitive friction, and predictability all play a role in guiding people seamlessly through spaces.

From these experiences, I internalized a powerful lesson about software: our intuition for using it often mirrors how we interact with the real world. And I developed a guiding hypothesis:

Our digital worlds shouldn’t be designed as separate from our physical worlds—they’re extensions of how we already think, move, and learn.

My view is that the best-designed software has a sense of effortlessness to it: users flow through the product without thinking too much. I believe you can learn a lot about how to craft these experiences by asking: “how can software better align with the way we naturally navigate the world?”

The Evolution of Software Design

At its core, I see software design as a necessary abstraction. It follows the arc of computing technology becoming more widely accessible to the masses. Good design makes complex systems usable. It allows us to leverage powerful technology by lowering the barrier to use. I believe design should act as a bridge: translating complexity into intuition, and enabling us to do more with technology’s potential. When done well, design can also amplify our desire to use something. This is why the way something looks can appeal to us emotionally.

Over the past ~50 years, software design has evolved with one clear goal: making computing easier and more accessible to more people.

Desktop-Focused Applications (1970s to early 2000s)

Software was built for specialists — developers, businesses, and technical users

Early interfaces were largely text-based, requiring users to memorize commands

Navigation was functional, and built around how hardware worked rather than how humans think

Accessibility was an afterthought. Users adapted to the software, not the other way around

Web & Cloud (Mid-1990s to 2010s)

With the rise of the Internet and cloud computing, we shifted our focus to web-based applications that you could access via browsers

Interfaces became graphical and structured with menus, hyperlinks, search bars

Software design was still hierarchical. Users had to learn each system’s structure; the system didn’t adapt to them

Mobile apps (Late 2000s to present)

The iPhone launched in 2007 and opened up new ways of interacting with software. Good design drove distribution for apps in an increasingly crowded marketplace

Mobile introduced new paradigms like touch gestures, voice commands, and small form factors for on-the-go use cases

Mobile software was still fairly structured: menus, grids, and predefined workflows

The Problems

Software has become more user-friendly over time, but it still has a long way to go. Here’s why:

Software is still finite, by definition

Most applications today are built around fixed structures. Every feature, screen, and interaction is pre-designed. I remember working on a dynamic landing page engine for a startup 10 years ago. I focused on creating templates that inserted dynamic values to give the appearance of fluidity. But the interface still lived inside a rigid structure that had to be predefined.

Using software is still work

Despite the improvements, software today demands cognitive effort. Users must think about tasks, navigate menus, and operate within constraints set by interface designers. It’s like every app or website you enter is a new home. You’re familiar with how homes are generally laid out, but in each one the layout is slightly different: you look at what’s on the walls, figure out where the bathroom is, or find the staircase to the second floor. I’d argue that the cognitive effort required to learn new apps and websites is still more work than it ought to be.

Software is largely context-blind

As I laid out in part 1, most software today doesn’t fully understand users’ context. This leads to additional friction when products don’t remember what you’ve already done or understand what you might want to do. It’s frustrating when you have to repeat yourself to an Instacart support agent after getting disconnected, or manually reset your route preferences in Google Maps when driving late at night. Software still relies on us to do the heavy lifting of providing context.

The Future of Software: More Adaptable, Less Static

Enter generative AI, which makes it possible to create fluid, adaptable interfaces at scale. The underlying breakthrough is that the cost of generating frontend content is near zero. In the past, making software feel personalized required manually designing states for every possible use case. Now, LLMs can handle that work dynamically, making software reactive and responsive in real time.

One way to think about this shift is through video game design. In games, environments don’t follow static scripts—they evolve based on player interactions.

We see a glimpse of this in the film Her, where the protagonist plays a game in which an avatar insults him back in real time. The game isn’t following a strict, pre-written script; it’s responding naturally, which makes the experience feel immersive. AI can bring this same level of responsiveness to everyday software. Imagine interfaces that adapt and reconfigure based on what you need in the moment.

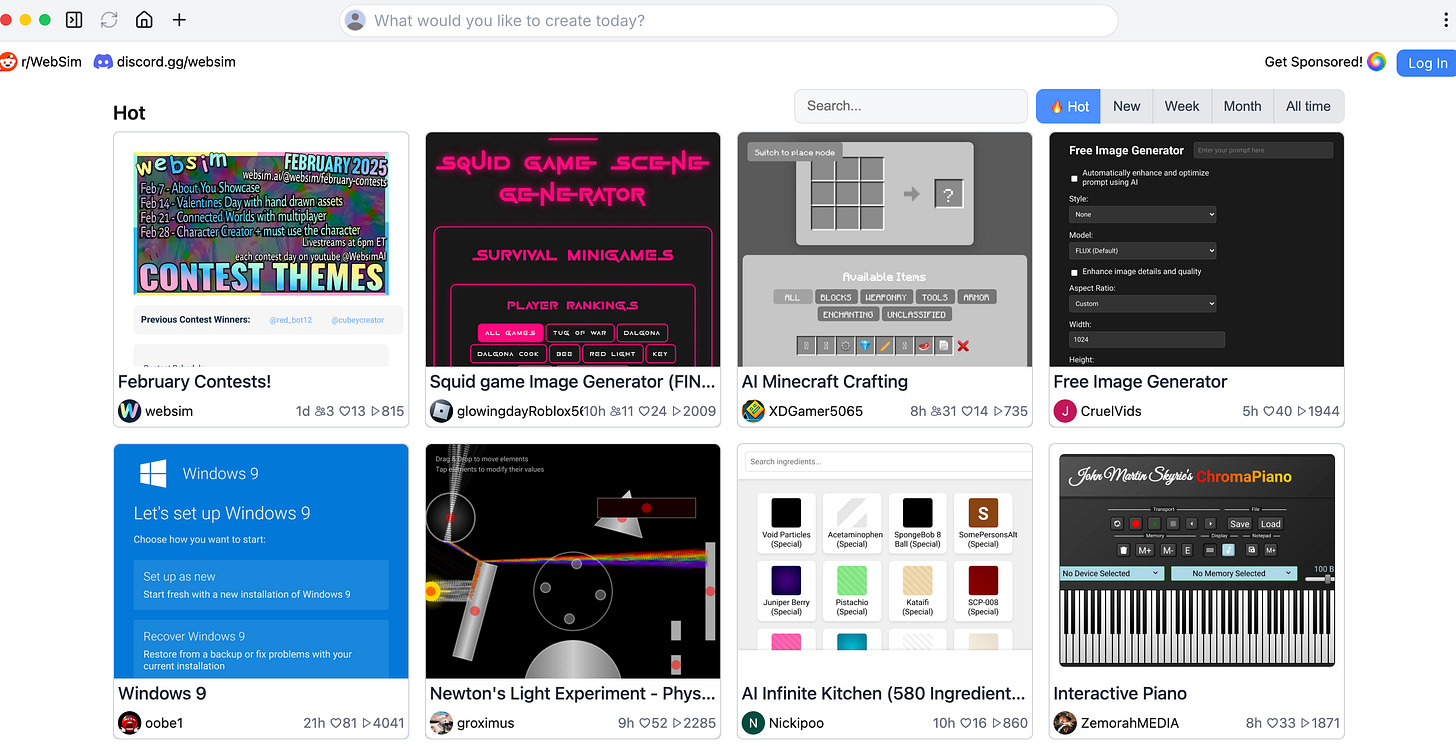

Glimpses of this future are already popping up. Websim, for example, allows users to create websites on the fly using natural language. Instead of selecting templates or manually arranging layouts, the interface generates itself dynamically.

Looking ahead, software could anticipate your needs and surface the right tools at the right time, just as a game world reveals new paths as you explore. And just as game worlds largely mirror the feeling of the real world, this paradigm could bring software closer to how we naturally move through it.

The Skeptics: Is This a Good Thing?

The common objection to more dynamism is that we’d lose the predictability and familiarity of well-designed software. Ami Vora talks about how important familiarity is to making products feel simple, and how that helped scale some of our most heavily used products like WhatsApp.

I’m not dismissing the importance of these things. They are paramount. But the world of video game design teaches us that we don’t need to sacrifice familiarity for fluidity. We can have both by establishing rules. In video games, creators give the world rules and physics that define how things interact, rather than prescribing every possible action. This also extends to how content is created in digital worlds, using techniques like procedural generation.

AI can let software designers take a similar approach. We can define systems that adapt in real time. We can give our products rules and physics that guide how our users (i.e., the players) explore. It should never feel like users are bumping into rigid constraints the way they do today. The software world can also unfold naturally, rather than feeling largely static.

The Next Step in Software Design

I’m excited about the next step in the arc of software design: software becoming more fluid. I’d enjoy using software that feels like less work and more fun and delightful. Software shouldn’t feel like clicking through static menus; it should feel like entering a world that unfolds in response to your needs. Generative AI can make this shift possible.

The question for designers can evolve from “what screens should we build?” to “what physics should define our world?”

In part 3, I’ll complete this series by exploring how agents can abstract away the work of using software. We’ll connect the dots across personalization, fluidity, and AI agents, and how together they’re redefining the future of software. Stay tuned!