Prompting Is Becoming Interface Design

Why the future of AI products is less about clever inputs and more about designing flows that shape intent and structure model behavior

TL;DR:

Prompt engineering is evolving from a clever input trick into a real product design problem. This post explores how prompting is changing: moving beyond writing the perfect words and toward systems that help users express intent clearly and make model behavior more predictable. I cover prompting modes, new interaction patterns, and how to design interfaces that shape both what goes in and what comes out of a model.

You Are the Bottleneck

Earlier this week, Google released a slew of new generative models. I finally tried out Veo 3, their latest video generation model. It was incredible.

As I was messing around with prompts to generate insanely realistic videos, I thought about how we try out the latest models. We attempt to pull out the intelligence we know exists in the model, but we struggle to find the right words to convey our intent: the idea that exists in our heads.

When I get a crappy response from a model, I usually ask: “is the model failing me? Or am I the bottleneck?” With how good the models are getting, it’s often the latter. I remind myself: the output is only as good as the input. Garbage in, garbage out.

Yet using AI still feels like fumbling around in the dark. We’re trying to turn a stateless, black-box model into a tool, thought partner, or reliable system. We take inspiration from prompt templates and tips. We experiment. Prompt engineering isn’t new. It’s already become an AI buzzword, usually described as:

The practice of designing inputs to language models in a way that reliably guides them to produce useful, accurate, or desired outputs.

But prompt engineering is evolving beyond crafting the right words. It’s becoming a design problem.

It draws not only on your ability to write clearly but also on your ability to think in systems. My point of view is that as models grow more capable, the burden of prompting “well” should shift from users to the systems and interfaces we use. We should expect new forms of interaction to emerge for both model input and output.

Why This Matters

As AI tools become more integrated into products, the ability to guide a model toward the right outcome isn’t just a technical skill—it’s a design opportunity. Helping users express intent clearly, and structuring what comes back, is how AI will shift from being impressive to actually being useful. This post breaks down what that shift looks like.

Prompting Modes: Zero-shot, Iterative, Chain-of-Thought, System

Before we look at how prompting is evolving, it's helpful to break down the modes most users rely on today. There are four main modes of prompting: zero-shot, iterative, chain-of-thought, and system. Each reflects a different way of steering the model: through directness, iteration, structure, or context.

Let’s imagine a hypothetical example of using an LLM to help you plan a trip. You want a list of the top 5 things to do in Paris.

1. Zero-shot prompting

With zero-shot prompting, you ask the model directly for a response, and that’s it. You don’t provide any examples of the output you’re looking for; you rely on the model’s pretrained knowledge to answer correctly.

Example:

Prompt: "What are the top 5 things to do in Paris?"

Output: "1. Visit the Eiffel Tower. 2. Explore the Louvre Museum. 3. Walk down the Champs-Élysées. 4. See the Notre-Dame Cathedral. 5. Relax in Luxembourg Gardens."

In this example, the result is immediate. If you didn’t specify that you want the “top” things, or if you left out “Paris,” the response might not match your expectations. There are extensions of this mode, including one-shot and few-shot prompting, where you do include examples.
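To make the difference concrete, here is a minimal Python sketch that builds the same request as a zero-shot prompt and as a one-shot prompt (one example pair; more pairs would make it few-shot). The helper names and the Rome example are hypothetical, and no model is actually called.

```python
# Sketch: the same request as a zero-shot prompt and as a few-shot prompt.
# Helper names and the example pair are hypothetical; no model is called.

def zero_shot(question: str) -> str:
    # Zero-shot: the question alone, with no examples of the desired output.
    return question


def few_shot(question: str, examples: list[tuple[str, str]]) -> str:
    # Few-shot: prepend worked question/answer pairs so the model can copy
    # their format and level of detail, then leave the final answer open.
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


examples = [
    ("What are the top 3 things to do in Rome?",
     "1. Colosseum. 2. Trastevere food walk. 3. Villa Borghese."),
]
print(zero_shot("What are the top 5 things to do in Paris?"))
print(few_shot("What are the top 5 things to do in Paris?", examples))
```

The example pair does double duty: it shows the model what “top things” means and what format the answer should take, which is exactly the information a bare zero-shot prompt leaves implicit.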

2. Iterative prompting

Iterative prompting is when you use the model’s initial response to refine your next prompt, iterating until you reach a satisfactory answer. In theory, you can keep going until you get tired or exhaust your token limit. It’s similar to having a human conversation.

Example:

First prompt: "What are the top 5 things to do in Paris?"

First output: "1. Eiffel Tower, 2. Louvre Museum, 3. Champs-Élysées, 4. Notre-Dame, 5. Luxembourg Gardens."

Follow-up prompt: "Can you include activities that are less touristy and more local?"

Follow-up output: "1. Stroll through Montmartre. 2. Explore Canal Saint-Martin. 3. Visit Le Marais for art galleries. 4. Try local food at the Marché des Enfants Rouges. 5. Relax at Parc des Buttes-Chaumont."

The process of iteration is your way of steering the model with feedback. This method is effective when each answer helps you clarify your intent. It’s a process of realizing you were imprecise to start and uncovering clarity incrementally.
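Mechanically, iteration works because each turn is appended to a shared history, so the follow-up is read in light of the first answer. A minimal sketch, assuming the common `role`/`content` chat-message shape; `fake_model` is a hypothetical stand-in for a real model call.

```python
# Sketch of iterative prompting: every turn is appended to a shared history,
# so a follow-up like "less touristy" is interpreted relative to the first list.
# `fake_model` is a hypothetical placeholder, not a real client.

def fake_model(messages: list[dict]) -> str:
    # A real client would send the whole `messages` history to an LLM.
    return f"(reply to: {messages[-1]['content']})"


class Conversation:
    def __init__(self) -> None:
        self.messages: list[dict] = []

    def ask(self, prompt: str) -> str:
        self.messages.append({"role": "user", "content": prompt})
        reply = fake_model(self.messages)
        # Store the reply as well; the next prompt builds on it.
        self.messages.append({"role": "assistant", "content": reply})
        return reply


chat = Conversation()
chat.ask("What are the top 5 things to do in Paris?")
chat.ask("Can you include activities that are less touristy and more local?")
print(len(chat.messages))  # two user turns and two assistant replies
```

Because the full history is resent every turn, long iterations eventually run into the token limit mentioned above.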

3. Chain-of-Thought (CoT) prompting

With CoT prompting, you encourage the model to think step-by-step before giving its answer. This leads to more structured, reasoned outputs. This method is especially effective with reasoning models that spend extra time and compute to “think more.”

Example:

Prompt:

“I’m going to Paris for 3 days. Think step-by-step to create a well-balanced itinerary that mixes sightseeing, food, and relaxation. In each step, consider my preferences for sightseeing at the beginning of the day and enjoying food at the end of the day. Assign weights to each candidate recommendation that account for these preferences. Order the final itinerary in descending order of the aggregate weight.”

Output:

Step 1: Break the trip into 3 themed days — Culture, Food, and Leisure.

Step 2: Assign activities to each day.

Day 1 (Culture): Louvre, Notre-Dame, Montmartre.

Day 2 (Food): Cooking class, Marché d’Aligre, wine tasting.

Day 3 (Leisure): Jardin du Luxembourg, spa afternoon, Seine boat ride.

Instead of jumping to a list, the model lays out a plan and fills in the details. You can think of the prompt as providing an algorithm for the model to implement. CoT prompting helps the model “reason out loud,” which is especially useful for open-ended planning tasks.
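Written out as ordinary code, the procedure that the chain-of-thought prompt asks for looks roughly like this: score each candidate against the stated preferences, then sort by aggregate weight. This illustrates the instructions in the prompt, not how the model computes internally; the candidates, base scores, and bonus values are all hypothetical.

```python
# Sketch of the "algorithm" spelled out in the chain-of-thought prompt:
# weight each candidate by the stated preferences, then sort descending.
# Candidates, base scores, and bonuses are hypothetical.
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    category: str   # "sightseeing", "food", or "relaxation"
    slot: str       # "morning", "afternoon", or "evening"
    base: float     # hypothetical baseline appeal


def weight(c: Candidate) -> float:
    score = c.base
    # Preference 1: sightseeing at the beginning of the day.
    if c.category == "sightseeing" and c.slot == "morning":
        score += 1.0
    # Preference 2: food at the end of the day.
    if c.category == "food" and c.slot == "evening":
        score += 1.0
    return score


candidates = [
    Candidate("Louvre", "sightseeing", "morning", 2.0),
    Candidate("Wine tasting", "food", "evening", 1.5),
    Candidate("Seine boat ride", "relaxation", "afternoon", 1.8),
    Candidate("Cooking class", "food", "morning", 1.6),
]

# Descending order of aggregate weight, as the prompt requests.
itinerary = sorted(candidates, key=weight, reverse=True)
print([c.name for c in itinerary])
```

Note that the “Cooking class” scheduled in the morning gets no food bonus and drops to the bottom, which is the kind of trade-off the prompt asks the model to reason through explicitly.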

4. System prompting

System prompts allow you to set a broader context for the model right at the start. This might include specific guidelines, style preferences, or constraints that define how the model should generate its responses. These prompts are persistent and don’t require users to steer the model each time.

Example:

System prompt: "You are an experienced travel expert and local guide who specializes in uncovering lesser-known attractions, hidden gems, and authentic experiences in major cities around the world. Your recommendations avoid tourist traps and instead focus on local culture, food, neighborhoods, and off-the-beaten-path spots that reflect the true character of a city. You write in a helpful, warm, and concise tone, and you always explain briefly why each recommendation is unique or worth visiting."

Prompt: "What are the top 5 things to do in Paris?"

Output:

Musée Jacquemart-André – A beautifully preserved 19th-century mansion that offers a quieter, more intimate art experience than the Louvre, with an impressive collection of decorative arts and old master paintings.

Marché d'Aligre – A vibrant local market where Parisians shop for fresh produce, cheese, and wine. It's a great place to soak up neighborhood energy and grab picnic supplies.

Canal Saint-Martin – A scenic canal lined with quirky boutiques, cozy cafés, and footbridges. Perfect for a relaxed afternoon walk that feels worlds away from the tourist core.

Le Musée de la Chasse et de la Nature – An eclectic and beautifully curated museum combining contemporary art with historic artifacts related to nature and hunting. Surprisingly delightful.

Parc des Buttes-Chaumont – One of the city’s most unusual parks, with dramatic cliffs, a suspension bridge, and panoramic views. A peaceful retreat where locals go to escape the crowds.

By using a system prompt, you avoid re-steering the model in every prompt. The system prompt primes the model before it sees your request, which yields a more specialized response and shapes the whole conversation up front.
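In practice, “persistent” usually means the product stores the system prompt once and prepends it to every request. A minimal sketch, assuming the `system`/`user` role convention that many chat APIs share; `build_request` is a hypothetical helper and the system text is abridged from the example above.

```python
# Sketch: the system prompt is stored once and prepended to every request,
# so individual user prompts never need to repeat it.
# The role names follow a common chat-API convention (an assumption here).

SYSTEM_PROMPT = (
    "You are an experienced travel expert and local guide who specializes in "
    "lesser-known attractions and authentic experiences."
)  # abridged from the example system prompt


def build_request(user_prompt: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict]:
    # Hypothetical helper: system context first, then the user's question.
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


for question in ["What are the top 5 things to do in Paris?",
                 "Where should I eat in Lyon?"]:
    request = build_request(question)
    print(request[0]["role"], "|", request[1]["content"])
```

The user types only the short question; the product supplies the context on every call.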

From Asking Better Questions to Building Better Systems

As models get smarter, the interface layer around them becomes even more important. We shouldn’t only expect users to become better prompters. We should also shift the burden to the interface layer.

It is the product’s responsibility to help users clarify their intent, guide the model, and deliver more reliable outcomes. We need smarter interfaces that make model behavior feel intentional, consistent, and aligned.

We want a model to feel like it understands us. That means more control, less guesswork. More determinism, less improvisation.

This shift can unfold across two layers:

How we help users assemble intent before model input

How the model structures its own behavior with output

I. Designing the Intent Layer

Before the model sees a single token, the user is already trying to express intent by writing a prompt. Translating an idea into model input is the new frontier of product design for AI-native products.

In well-designed systems, this doesn’t happen in a single step. There is a flow. And that flow is shaped by the interface, not the user alone.

Here are three emerging patterns for how intent can be shaped, structured, and delivered…all before an LLM call:

1. Client-side guided shaping

The user starts writing a prompt, but the text is steered by the client. The interface provides helpful nudges, suggestions, or constraints to make it better. The user is still actively writing the input and in full control.

You begin typing “Cool stuff to do in Paris.” The system suggests more precise categories like “scenic walks,” “local food,” or “live music.” You revise your input based on these hints before submitting it.

This mode supports intent refinement without removing the typing experience. It helps users become better prompters without needing to start from scratch each time.
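A minimal sketch of the client-side step: as the user types, the client checks the draft for vague phrasing and offers more precise categories, without sending anything to a model. The vague-term list and the category hints are hypothetical; a real product might derive them from analytics or a lightweight classifier.

```python
# Sketch of client-side guided shaping: the draft is checked for vague
# phrasing and the client offers more precise categories as hints.
# VAGUE_TERMS and CATEGORY_HINTS are hypothetical; no model is called.

VAGUE_TERMS = {"cool stuff", "things", "something fun"}
CATEGORY_HINTS = ["scenic walks", "local food", "live music"]


def suggest(draft: str) -> list[str]:
    text = draft.lower()
    if any(term in text for term in VAGUE_TERMS):
        # Only offer categories the user hasn't already written.
        return [hint for hint in CATEGORY_HINTS if hint not in text]
    return []


print(suggest("Cool stuff to do in Paris"))
# After the user adds one hint, only the remaining ones are offered.
print(suggest("Cool stuff to do in Paris: live music"))
```

The user remains the author of the prompt; the client only narrows the space of what they might mean.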

2. Assisted shaping

The system takes responsibility for improving your prompt after you submit it. It intercepts your input and upgrades or restructures it behind the scenes using logic, metadata, or historical context. All of this happens before the prompt is sent to the model.

You write: “Paris recommendations.” The system expands this into: “What are five local, less touristy things to do in Paris that involve food and walking?” and then sends it to the model.

You still drive the interaction, but the system ensures what the model sees is more thoughtful and specific than your original prompt.
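One way to picture assisted shaping is a rewrite step that runs server-side between submission and the model call, filling in detail from stored user context. A minimal sketch; the profile fields and the template are hypothetical, and the “local, less touristy” framing is hard-coded for brevity.

```python
# Sketch of assisted shaping: a terse submitted prompt is expanded using
# stored user context before the model sees it.
# Profile fields and template wording are hypothetical.

def expand_prompt(raw: str, profile: dict) -> str:
    city = profile.get("city")
    # Leave the prompt untouched when the stored context doesn't clearly apply.
    if not city or city.lower() not in raw.lower():
        return raw
    interests = " and ".join(profile.get("interests", [])) or "anything"
    count = profile.get("count", 5)
    return (f"What are {count} local, less touristy things to do in {city} "
            f"that involve {interests}?")


profile = {"city": "Paris", "interests": ["food", "walking"], "count": 5}
print(expand_prompt("Paris recommendations", profile))
```

The fallback matters: when the system isn't confident its context applies, passing the user's words through unchanged is safer than a confident but wrong rewrite.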

3. Interaction-driven shaping

Finally, we can reimagine prompting so that it is no longer unconstrained free text. Instead, we can ask users to express intent through fixed UI elements such as toggles, filters, and drop-downs. This translates the open-endedness of chat into a finite space, and the model input is assembled behind the scenes from those interactions.

You select “Paris,” check “Hidden gems,” adjust pace to “Slow,” and select interests like “Food” and “Neighborhoods.” The system assembles a full prompt in the background and sends it to the model.

This is prompting without prompts. It opens up model interaction to everyone, regardless of how comfortable they are with language or specificity.
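Behind the scenes, interaction-driven shaping can be as simple as mapping UI state to a prompt template. A minimal sketch; the field names, allowed values, and wording are hypothetical. The point is that every control has a finite set of values, so the set of possible prompts is finite too.

```python
# Sketch of interaction-driven shaping: UI controls map to a finite set of
# values, and the prompt is assembled from them.
# Field names, allowed values, and wording are hypothetical.
from dataclasses import dataclass, field

PACES = {"Slow", "Moderate", "Packed"}


@dataclass
class TripSelections:
    city: str
    hidden_gems: bool = False
    pace: str = "Moderate"
    interests: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        # Reject values the UI could never produce; the input space stays finite.
        if self.pace not in PACES:
            raise ValueError(f"unknown pace: {self.pace}")
        parts = [f"Suggest things to do in {self.city}"]
        if self.hidden_gems:
            parts.append("favoring hidden gems over famous landmarks")
        parts.append(f"at a {self.pace.lower()} pace")
        if self.interests:
            parts.append("focused on " + " and ".join(i.lower() for i in self.interests))
        return ", ".join(parts) + "."


ui = TripSelections("Paris", hidden_gems=True, pace="Slow",
                    interests=["Food", "Neighborhoods"])
print(ui.to_prompt())
```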

Together, these three modes form a spectrum of how user intent gets assembled before reaching the model. Each step increases system responsibility and reduces the cognitive burden on the user.

II. Structuring the Output Layer

Once intent is assembled and the prompt is sent, the interface still plays a critical role. At this stage, it’s no longer about shaping the input. The goal is to shape the user’s experience of the output.

We must help users understand what the model is doing and trust how it arrived at the response. Even when techniques like chain-of-thought prompting are used, the model alone cannot create a fully interpretable or trustworthy experience. That responsibility still belongs to the product.

Here are three emerging strategies to bring more interface design to output generation.

1. Reinforce structure in the output UI

Model outputs are often returned as long blocks of text. Interfaces can reflect a point of view on what users actually need. We can break that text into sections, highlight decision points, or organize the content into a more readable format.

A three-day itinerary becomes three collapsible cards: Day 1, Day 2, and Day 3. Each card includes a summary, map link, and editable activities.

This turns passive output into something navigable and easier to act on.
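A common way to get there is to ask the model for structured data (for example JSON) rather than prose and let the interface render it. A minimal sketch; the schema and `raw_output` are hypothetical stand-ins for a real model response, and the map link from the example is omitted for brevity.

```python
# Sketch: request the itinerary as JSON, then turn it into per-day cards the
# UI can render as collapsible sections. Schema and raw_output are hypothetical.
import json
from dataclasses import dataclass


@dataclass
class DayCard:
    title: str
    summary: str
    activities: list[str]   # a plain list, so the UI can let users edit it
    collapsed: bool = True  # cards start collapsed


raw_output = """
{"days": [
  {"day": 1, "summary": "Culture", "activities": ["Louvre", "Notre-Dame", "Montmartre"]},
  {"day": 2, "summary": "Food", "activities": ["Cooking class", "Marché d'Aligre", "Wine tasting"]},
  {"day": 3, "summary": "Leisure", "activities": ["Jardin du Luxembourg", "Spa afternoon", "Seine boat ride"]}
]}
"""


def to_cards(text: str) -> list[DayCard]:
    data = json.loads(text)
    return [DayCard(f"Day {d['day']}", d["summary"], list(d["activities"]))
            for d in data["days"]]


for card in to_cards(raw_output):
    print(card.title, "-", card.summary, "-", ", ".join(card.activities))
```

In a real product, `json.loads` can fail on malformed output, so a production version would validate the structure and fall back to plain text.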

2. Show intermediate thinking when it adds clarity

When chain-of-thought prompting or multi-step planning is used, the product can choose whether or not to surface the model's intermediate reasoning. Some users prefer clean answers. Others want to see how the model got there.

The interface includes a toggle: “Show planning steps.” Clicking it reveals the model’s breakdown of how it chose activities for each day.

This lets users access reasoning without overwhelming the default experience.
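A minimal sketch of the toggle: the planning steps are stored alongside the final answer but rendered only when the user opts in. The `PlannedResponse` fields are hypothetical; how a product obtains the steps (a separate field in structured output, or a second call) is a design choice not covered here.

```python
# Sketch of a "Show planning steps" toggle: steps are kept with the answer
# but rendered only on request. The response fields are hypothetical.
from dataclasses import dataclass


@dataclass
class PlannedResponse:
    answer: str
    steps: list[str]


def render(resp: PlannedResponse, show_steps: bool = False) -> str:
    lines = [resp.answer]
    if show_steps:
        lines.append("Planning steps:")
        lines.extend(f"{i}. {step}" for i, step in enumerate(resp.steps, start=1))
    return "\n".join(lines)


resp = PlannedResponse(
    answer="Day 1: Culture. Day 2: Food. Day 3: Leisure.",
    steps=["Break the trip into 3 themed days.", "Assign activities to each day."],
)
print(render(resp))                   # clean default view
print(render(resp, show_steps=True))  # toggle switched on
```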

3. Offer places for user correction or steering

After a response is generated, the product can invite the user to adjust or refine the output. This might mean changing priorities, removing certain ideas, or asking for a different tone.

After reviewing a generated itinerary, the interface asks: “Want more food-focused ideas?” The user selects a new focus, and the system reruns the prompt with updated guidance.

This builds a lightweight feedback loop that helps the model feel more interactive and responsive.
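A minimal sketch of that loop: the user’s selection is folded back into the original request as an extra constraint, and the request is rerun. `generate` is a hypothetical stand-in for the model call, and the constraint wording is an assumption.

```python
# Sketch of a correction loop: each user adjustment becomes an extra
# constraint on the original request, and the request is rerun.
# `generate` is a hypothetical placeholder for a model call.

def generate(prompt: str) -> str:
    return f"[itinerary for: {prompt}]"


def rerun_with_focus(base_prompt: str, focus: str, history: list[str]) -> str:
    # Record the adjustment in `history` (mutated in place) so that
    # refinements accumulate instead of resetting each time.
    history.append(focus)
    constraints = "; ".join(f"emphasize {f}" for f in history)
    return generate(f"{base_prompt} ({constraints})")


base = "Plan a 3-day Paris itinerary"
adjustments: list[str] = []
print(generate(base))
print(rerun_with_focus(base, "food-focused ideas", adjustments))
```

Rerunning from the base prompt plus accumulated constraints, rather than from the last output, keeps each result traceable to the user’s explicit choices.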

Together, these patterns give the product control over how model behavior is experienced. When paired with the intent-layer strategies, they form a complete system: one part helps users express what they want, and the other helps them understand and shape what the model gives back.

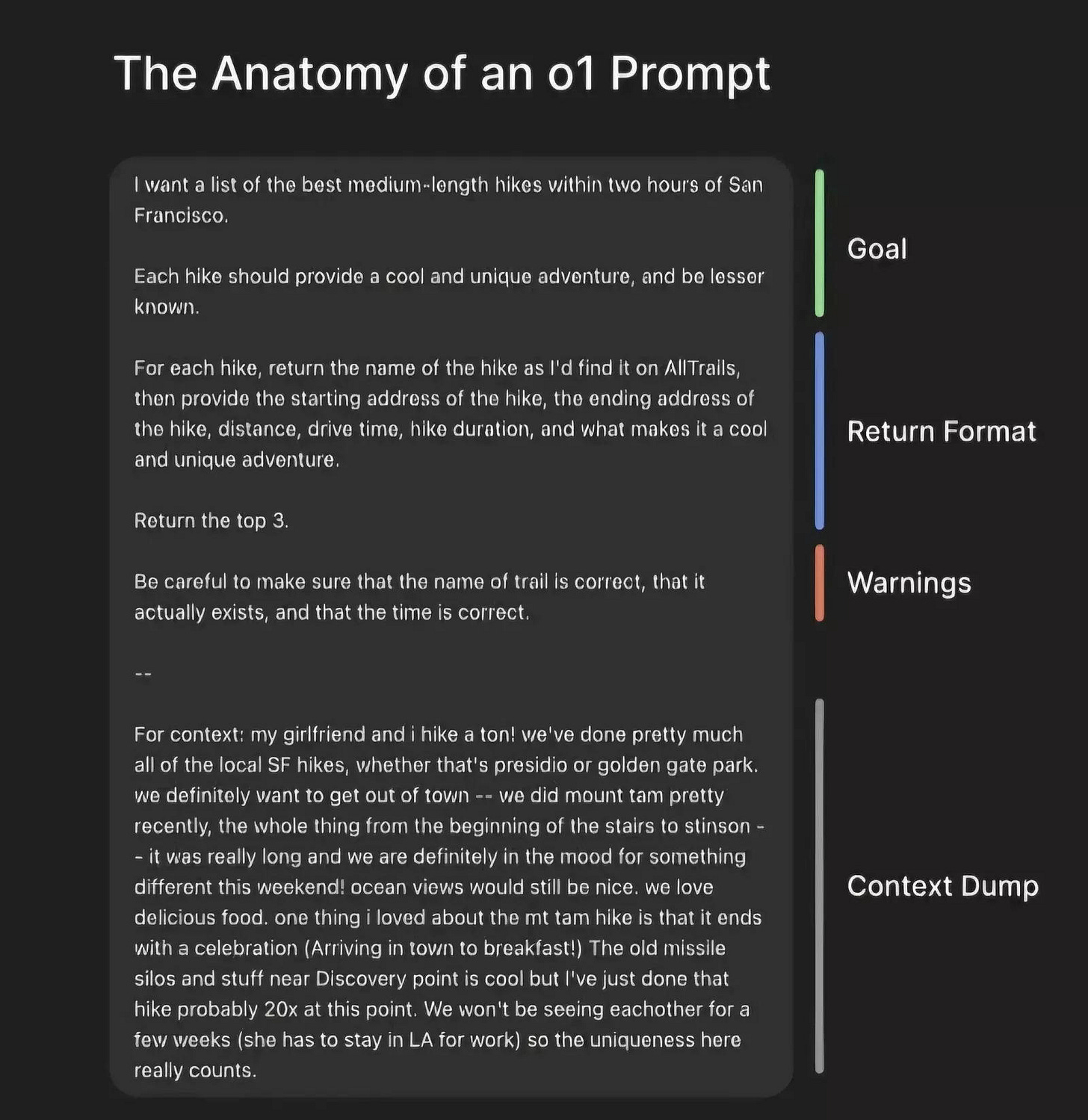

Prompting Is Becoming System Design

Prompting used to feel like a hack. You gave the model the right words, and it gave you something magical in return. But that mental model no longer fits.

As models become more powerful, prompting is evolving from a writing trick into a system-level responsibility. Products should help users express intent, and their interfaces should guide what happens next.

We are entering a new design era where:

Prompts are assembled through guided flows, not crafted from scratch

Outputs are structured and inspectable, not returned as monolithic text

Interfaces shape both input and output instead of simply wrapping around the model

The burden is shifting. It is moving from the user to the product.

If you are building AI products, this is where the leverage lives. The future of prompting is not about being clever with your words. It is about designing systems that understand what users mean, and giving them control over what happens next.
